Exploration and Research

After completing the state-of-the-art review, we identified several exploration paths, such as implementing an iCaRL model or other architectures suited to incremental learning. We also adopted the CFEE (Compound Facial Expressions of Emotion) dataset as the basis for training and evaluating our models.

CFEE (Compound Facial Expressions of Emotion)

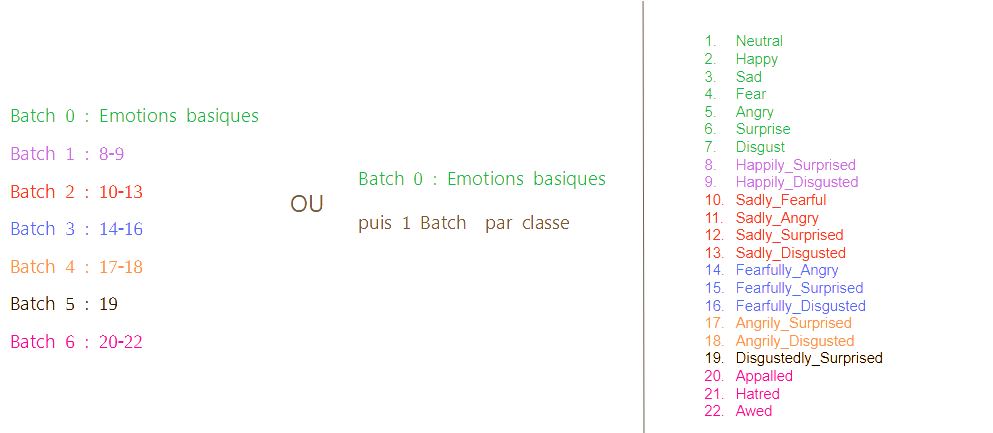

The CFEE dataset consists of images of posed (acted) facial expressions performed by volunteers. Because the expressions are not spontaneous, this introduces a possible bias. The dataset covers 22 emotion categories: the 6 basic Ekman emotions, the neutral expression, and 15 compound emotions such as Happily_Disgusted or Sadly_Surprised, which are combinations of basic emotions.

Source: Compound Facial Expression Recognition Based on Highway CNN

This dataset is used to train and test our emotion recognition models incrementally.

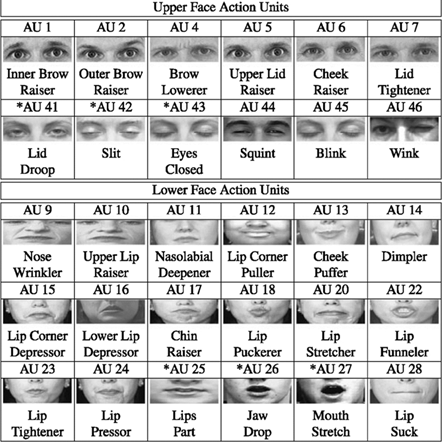
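As a minimal sketch, the 22 CFEE categories can be organized into the three groups (basic, neutral, compound) that later drive our batching. The compound categories follow those defined by the CFEE authors. However, the exact label strings (written in the Happily_Disgusted style used above) and the helper names (`label_group`, `components`) are hypothetical and are not taken from our loader.

```python
# Hypothetical label strings for the 22 CFEE categories.
BASIC = ["Happy", "Sad", "Fearful", "Angry", "Surprised", "Disgusted"]  # 6 Ekman emotions
NEUTRAL = ["Neutral"]
COMPOUND = [
    "Happily_Surprised", "Happily_Disgusted",
    "Sadly_Fearful", "Sadly_Angry", "Sadly_Surprised", "Sadly_Disgusted",
    "Fearfully_Angry", "Fearfully_Surprised", "Fearfully_Disgusted",
    "Angrily_Surprised", "Angrily_Disgusted", "Disgustedly_Surprised",
    "Appalled", "Hatred", "Awed",
]
ALL_CLASSES = BASIC + NEUTRAL + COMPOUND

# Integer ids, as expected by most scikit-learn classifiers.
LABEL_TO_ID = {name: i for i, name in enumerate(ALL_CLASSES)}

# Maps the adverb prefix of a compound label to its basic emotion.
ADVERB_TO_BASIC = {
    "Happily": "Happy", "Sadly": "Sad", "Fearfully": "Fearful",
    "Angrily": "Angry", "Disgustedly": "Disgusted",
}


def label_group(label):
    """Return 'basic', 'neutral' or 'compound' for a CFEE label."""
    if label in BASIC:
        return "basic"
    if label in NEUTRAL:
        return "neutral"
    if label in COMPOUND:
        return "compound"
    raise ValueError(f"unknown label: {label}")


def components(label):
    """Split an Adverb_Adjective compound label into its two basic emotions.

    Returns None for labels not written in that form (basic emotions,
    Neutral, and the compound classes Appalled, Hatred, Awed).
    """
    if "_" not in label:
        return None
    adverb, adjective = label.split("_", 1)
    return (ADVERB_TO_BASIC[adverb], adjective)
```

For example, `components("Happily_Disgusted")` yields `("Happy", "Disgusted")`.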

Action Unit (AU)

Action Units (AUs) describe the activation of individual facial muscles or muscle groups, as defined by the Facial Action Coding System (FACS). They can be encoded as binary values (present/absent) or as intensity values. In our project, AUs are extracted automatically with the OpenFace tool. They are interpretable and compact, and their low dimensionality makes them well suited to incremental models.
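The sketch below shows one way to turn an OpenFace CSV into a compact AU feature matrix. It assumes the usual OpenFace 2.x FeatureExtraction layout, with `AUxx_r` columns for intensities (0 to 5), `AUxx_c` columns for presence (0 or 1), a `success` column, and headers separated by ", ". These column names should be checked against our exported files. The function name `load_au_features` is hypothetical.

```python
import csv
import io
import re

import numpy as np

# Column-name patterns assumed from the OpenFace 2.x CSV output.
AU_INTENSITY = re.compile(r"^AU\d{2}_r$")  # intensity, 0 to 5
AU_PRESENCE = re.compile(r"^AU\d{2}_c$")   # presence, 0 or 1


def load_au_features(csv_text, kind="r"):
    """Return (AU column names, feature matrix) for successfully tracked frames.

    kind="r" selects intensity columns, kind="c" selects presence columns.
    """
    pattern = AU_INTENSITY if kind == "r" else AU_PRESENCE
    # skipinitialspace strips the space after each comma, in headers and values.
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    au_cols = sorted(c for c in reader.fieldnames if pattern.match(c))
    rows = []
    for row in reader:
        # Skip frames where OpenFace failed to track the face.
        if row.get("success", "1").strip() != "1":
            continue
        rows.append([float(row[c]) for c in au_cols])
    return au_cols, np.array(rows, dtype=float)
```

Each frame becomes a short vector (one value per AU), which is the low-dimensional input that the incremental classifiers below consume.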

Source: A comprehensive survey on automatic facial action unit analysis

iCaRL

The iCaRL model was identified in our state-of-the-art review as a reference method for class-incremental learning, and we explored its potential for our use case. However, implementing it requires managing exemplar sets, applying knowledge distillation, and building a custom architecture. Given these constraints, we did not include iCaRL in our experimental pipeline and favored simpler solutions that fit more easily into it.
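The sketch below illustrates what "managing exemplar sets" involves. It implements iCaRL's herding-based exemplar selection and its nearest-mean-of-exemplars classifier on fixed, precomputed feature vectors. It deliberately omits the representation update with knowledge distillation, which was the harder part. Excluding already selected samples during herding is a common implementation choice rather than a detail taken from our work, and the function names are hypothetical.

```python
import numpy as np


def l2_normalize(x):
    """Scale each feature vector to unit L2 norm, as iCaRL does."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def herding_selection(features, m):
    """Pick m exemplar indices whose running mean best approximates the class mean."""
    feats = l2_normalize(features)
    mu = feats.mean(axis=0)
    selected = []
    running_sum = np.zeros_like(mu)
    for k in range(1, m + 1):
        # Mean of the exemplar set if each candidate were added as the k-th exemplar.
        candidates = (running_sum + feats) / k
        dists = np.linalg.norm(mu - candidates, axis=1)
        if selected:
            dists[selected] = np.inf  # do not pick the same sample twice
        idx = int(np.argmin(dists))
        selected.append(idx)
        running_sum += feats[idx]
    return selected


def nme_predict(x, exemplar_sets):
    """Nearest-mean-of-exemplars classification.

    exemplar_sets maps a class label to an array of exemplar feature vectors.
    """
    labels = list(exemplar_sets)
    means = np.stack(
        [l2_normalize(l2_normalize(exemplar_sets[c]).mean(axis=0)) for c in labels]
    )
    dists = np.linalg.norm(l2_normalize(x)[:, None, :] - means[None, :, :], axis=2)
    return [labels[i] for i in dists.argmin(axis=1)]
```

Each new class adds an exemplar set under a fixed memory budget, so existing sets must also be shrunk and the class means recomputed whenever the feature extractor changes. This bookkeeping is part of why simpler baselines were preferred.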

Autoencoder

Autoencoders are unsupervised neural networks that learn to compress data into a compact representation and then reconstruct it. We considered using them to extract compact features from images or to generate synthetic data for pseudo-rehearsal. This approach could reduce storage needs while preserving representations useful for incremental classification.
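A minimal sketch of the idea, assuming AU-like input vectors: a single-hidden-layer autoencoder built by training scikit-learn's `MLPRegressor` to reproduce its own input. The bottleneck layer gives the compact code. This is a stand-in chosen for brevity, not an architecture we committed to. `code_size=4`, the `tanh` activation and the helper names are assumptions.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor


def fit_autoencoder(X, code_size=4, seed=0):
    """Train an MLP to map X back to X through a code_size-unit bottleneck.

    Inputs are ideally scaled to a similar range (e.g. AU intensities / 5).
    """
    ae = MLPRegressor(
        hidden_layer_sizes=(code_size,),
        activation="tanh",
        max_iter=2000,
        random_state=seed,
    )
    ae.fit(X, X)  # reconstruction target is the input itself
    return ae


def encode(ae, X):
    """Compact code: the hidden (bottleneck) layer activations."""
    return np.tanh(X @ ae.coefs_[0] + ae.intercepts_[0])


def decode(ae, Z):
    """Map codes back to input space (identity output layer of MLPRegressor)."""
    return Z @ ae.coefs_[1] + ae.intercepts_[1]
```

Storing codes instead of raw vectors reduces memory. Feeding sampled or stored codes through `decode` is the basic building block of pseudo-rehearsal, where reconstructed samples of old classes are replayed alongside new data.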

Batching

In our project, batching refers to dividing the dataset into successive class batches. This simulates a class-incremental learning scenario, where new classes are introduced to the model progressively without access to previous data.

Two batching strategies were considered:

- Grouped batches: a first batch of basic emotions, followed by batches that each introduce a group of compound emotions.

- Unit batches: each new class is introduced individually, giving a more granular and controlled learning process.

This approach enables us to:

- Evaluate the model’s robustness to catastrophic forgetting.

- Measure the impact of each added class on overall performance.

- Test techniques such as rehearsal and active learning in a controlled setting.
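The batching protocol and its evaluation can be sketched as follows. The two builders produce the grouped and unit batch sequences described above. The two metrics then summarize an accuracy matrix `acc`, where `acc[i, j]` is the accuracy on batch j's classes after training step i. Including Neutral in the first batch, the group size, and all function names are assumptions for illustration. The forgetting measure is one common definition from the continual-learning literature, not necessarily the one we report.

```python
import numpy as np


def grouped_batches(first_batch, compound, group_size):
    """First batch (e.g. basic emotions + Neutral), then compound emotions in groups."""
    batches = [list(first_batch)]
    for start in range(0, len(compound), group_size):
        batches.append(list(compound[start:start + group_size]))
    return batches


def unit_batches(first_batch, remaining):
    """First batch, then exactly one new class per batch."""
    return [list(first_batch)] + [[label] for label in remaining]


def batch_indices(y, batches):
    """Sample indices whose label belongs to each batch."""
    y = np.asarray(y)
    return [np.flatnonzero(np.isin(y, batch)) for batch in batches]


def average_accuracy(acc):
    """Mean accuracy over all batches seen so far, after each step.

    acc[i, j] is only read for j <= i; entries above the diagonal are ignored.
    """
    acc = np.asarray(acc, dtype=float)
    return [float(acc[i, : i + 1].mean()) for i in range(acc.shape[0])]


def average_forgetting(acc):
    """Mean drop from each old batch's best accuracy to its final accuracy."""
    acc = np.asarray(acc, dtype=float)
    last = acc.shape[0] - 1
    if last == 0:
        return 0.0
    drops = [acc[j:last, j].max() - acc[last, j] for j in range(last)]
    return float(np.mean(drops))
```

`average_accuracy` shows how each added class or group changes overall performance, while `average_forgetting` isolates how much the old batches degrade. Comparing both with and without rehearsal gives a direct view of catastrophic forgetting.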

Existing Incremental Models

We tested several incremental models available in the scikit-learn library, including:

- Multinomial Naive Bayes (MNB)

- Perceptron (SGDClassifier)

- Passive-Aggressive Classifier (PAC)

- Bernoulli Naive Bayes (BNB)

- Random Forests, adapted to batch mode (scikit-learn's RandomForestClassifier has no partial_fit method)

- MLPClassifier, using controlled incremental updates

These models served as baselines, allowing rapid evaluation of incremental performance using AU data and feature vectors extracted from CNNs.
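A minimal sketch of how such baselines can be trained class-incrementally with scikit-learn's `partial_fit`. Synthetic `make_blobs` data (17 non-negative features, 6 classes) stands in for AU vectors, and the batch split and the `run_class_incremental` name are hypothetical. One constraint shapes the whole setup: `partial_fit` requires the full list of classes on the first call, so the model must be told in advance about classes it has not yet seen.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB


def run_class_incremental(model, X_train, y_train, X_test, y_test, batches):
    """Train batch by batch; return test accuracy on all seen classes after each step."""
    all_classes = np.unique(y_train)
    seen = []
    history = []
    for batch in batches:
        train_mask = np.isin(y_train, batch)
        # Only the current batch's samples are used: no access to previous data.
        model.partial_fit(X_train[train_mask], y_train[train_mask], classes=all_classes)
        seen.extend(batch)
        test_mask = np.isin(y_test, seen)
        history.append(model.score(X_test[test_mask], y_test[test_mask]))
    return history


# Synthetic stand-in for AU feature vectors.
X, y = make_blobs(n_samples=600, centers=6, n_features=17, random_state=0)
X = X - X.min()  # MultinomialNB requires non-negative features
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)
batches = [[0, 1], [2, 3], [4, 5]]

for name, model in [("Perceptron", Perceptron(random_state=0)), ("MultinomialNB", MultinomialNB())]:
    scores = run_class_incremental(model, X_tr, y_tr, X_te, y_te, batches)
    print(name, [round(s, 2) for s in scores])
```

The same loop works unchanged for `BernoulliNB`, `SGDClassifier` and `MLPClassifier`, which also expose `partial_fit`. Random Forests need a different, batch-mode treatment because they lack that method.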