Implementation

We first compared several non-incremental models to establish a performance baseline. The most effective model across the 22 emotion classes was a Random Forest, which achieved a score of 0.69. This baseline gives us a reference point against which to judge the incremental models. As previously mentioned, we use the CFEE dataset and extract Action Units (AUs) with OpenFace.

In addition to AUs, we extracted deep feature vectors using pre-trained models such as GoogLeNet, ResNet, and MobileNet. These features enrich the input of the classification models with visual representations that are richer than AUs alone.

Incremental Models

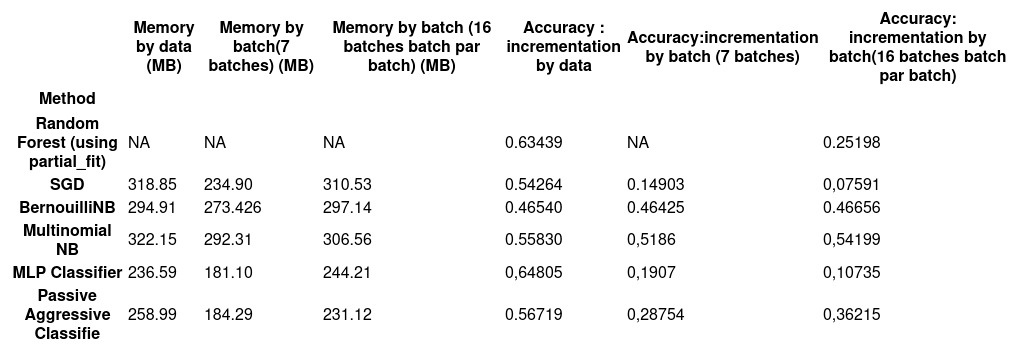

For incremental learning, we used models from the scikit-learn library that can be trained batch by batch, including:

- Random Forest (adapted via partial_fit)

- Perceptron (SGDClassifier)

- Bernoulli Naive Bayes (BNB)

- Multinomial Naive Bayes (MNB)

- MLPClassifier (iterative batch updates)

- Passive-Aggressive Classifier (PAC)
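As a rough illustration of batch-wise training, the sketch below feeds synthetic data to three of the listed estimators through scikit-learn's `partial_fit`. It is not our experimental code: the data generator, the 17-column AU layout, the batch sizes, and the `binarize` threshold are all assumptions made for the example. Note that `partial_fit` expects the full label set to be declared on the first call, even if samples of some classes only arrive later.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier
from sklearn.naive_bayes import BernoulliNB, MultinomialNB

rng = np.random.default_rng(0)
N_CLASSES, N_AUS = 7, 17  # assumed sizes, for illustration only

# Synthetic stand-in for AU intensities (non-negative, roughly in [0, 5]).
class_means = rng.uniform(0.0, 5.0, size=(N_CLASSES, N_AUS))


def make_batch(n_samples):
    """Draw a labelled batch of fake AU vectors (hypothetical helper)."""
    y = rng.integers(0, N_CLASSES, size=n_samples)
    noise = rng.normal(0.0, 0.5, size=(n_samples, N_AUS))
    X = np.clip(class_means[y] + noise, 0.0, 5.0)
    return X, y


models = {
    "perceptron": SGDClassifier(loss="perceptron", random_state=0),
    "bnb": BernoulliNB(binarize=2.5),  # threshold is an arbitrary assumption
    "mnb": MultinomialNB(),            # requires non-negative features
}

all_classes = np.arange(N_CLASSES)
for _ in range(10):  # ten incoming batches
    X_batch, y_batch = make_batch(64)
    for model in models.values():
        # Each call updates the model with the new batch only.
        model.partial_fit(X_batch, y_batch, classes=all_classes)

X_test, y_test = make_batch(500)
scores = {name: model.score(X_test, y_test) for name, model in models.items()}
print(scores)
```

The scores printed here reflect the synthetic data only and say nothing about CFEE.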

The performance of these models using only AUs is shown below:

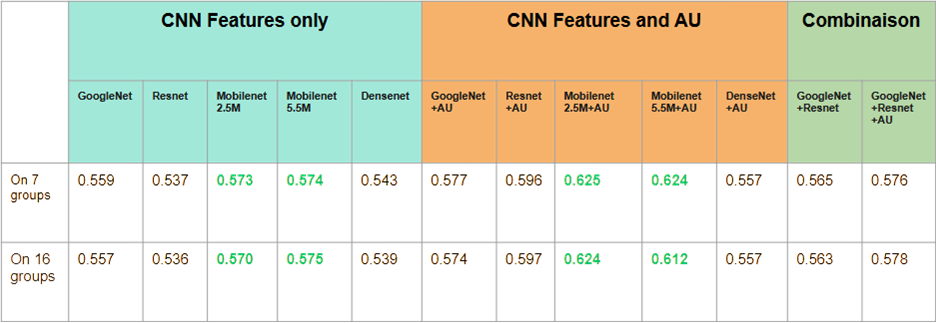

The same models were then tested on enriched input (AUs combined with CNN features) to evaluate the impact of this combination.

Pre-trained Models

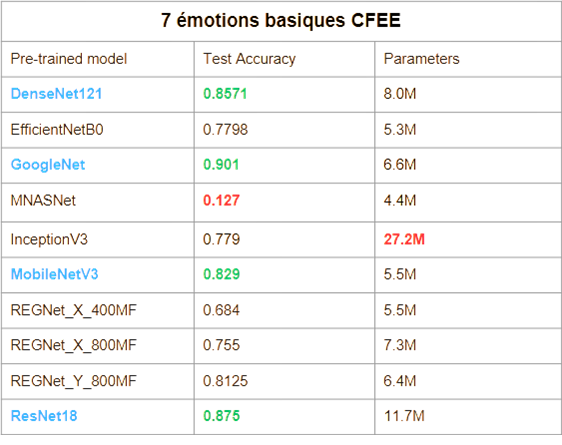

We evaluated several CNNs pre-trained on ImageNet on the 7 basic emotions (the 6 Ekman emotions plus neutral). This restriction follows the incremental learning setup, where the model does not have access to all classes from the start.

The purpose is to use these CNNs solely as feature extractors, removing their final classification layer.

The most effective models identified were:

- DenseNet121

- GoogLeNet

- MobileNet v3

- ResNet-18

With the classification layer removed, each network maps a CFEE image to a feature vector. The resulting vectors are then concatenated with the AU data and used as input for the incremental models.

Final Implementation

The final approach combines Action Units with CNN features and uses Multinomial Naive Bayes (MNB) as the classifier. This model gave the best results in our incremental experiments.

This architecture offers a good trade-off between accuracy, implementation simplicity, memory efficiency, and compatibility with class-incremental learning.
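To make the class-incremental use of MNB more concrete, the sketch below trains a `MultinomialNB` first on 7 basic classes and then on 15 further classes, and compares accuracy on the basic classes before and after the second phase. All data is synthetic, and the feature sizes, noise levels, and batch counts are assumptions; the before/after comparison is only one informal way to look at forgetting and is not necessarily the measure used in our experiments.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

rng = np.random.default_rng(0)
N_BASIC, N_TOTAL = 7, 22   # basic classes, then all classes
N_AUS, N_CNN = 17, 512     # assumed feature sizes

# Synthetic class prototypes for both feature types (illustration only).
au_means = rng.uniform(0.0, 5.0, size=(N_TOTAL, N_AUS))
cnn_means = rng.uniform(0.0, 1.0, size=(N_TOTAL, N_CNN))


def make_batch(labels, n_samples):
    """Fake AU + CNN batch restricted to the given labels (hypothetical helper)."""
    y = rng.choice(labels, size=n_samples)
    au = np.clip(au_means[y] + rng.normal(0.0, 0.5, (n_samples, N_AUS)), 0.0, None)
    cnn = np.clip(cnn_means[y] + rng.normal(0.0, 0.2, (n_samples, N_CNN)), 0.0, None)
    # MNB needs non-negative input, hence the clipping above.
    return np.hstack([au, cnn]), y


basic = np.arange(N_BASIC)
compound = np.arange(N_BASIC, N_TOTAL)
clf = MultinomialNB()

# Phase 1: only the basic classes are seen, but all labels are declared.
for _ in range(5):
    X, y = make_batch(basic, 128)
    clf.partial_fit(X, y, classes=np.arange(N_TOTAL))

X_basic_test, y_basic_test = make_batch(basic, 500)
acc_before = clf.score(X_basic_test, y_basic_test)

# Phase 2: the remaining classes arrive in later batches.
for _ in range(5):
    X, y = make_batch(compound, 128)
    clf.partial_fit(X, y)

acc_after = clf.score(X_basic_test, y_basic_test)
print(f"basic-class accuracy before: {acc_before:.3f}, after: {acc_after:.3f}")
```

Because MNB only accumulates per-class counts, each update is cheap and no past samples need to be stored, which is consistent with the memory argument above.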

The final performance of the MNB model with different feature types is shown below:

Conclusion and Perspectives

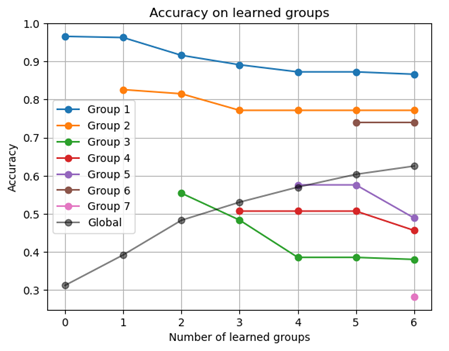

Our incremental learning approach handles emotion recognition on the CFEE dataset by combining Action Units from OpenFace with visual features from pre-trained CNNs. The Multinomial Naive Bayes classifier emerged as the best fit for our setup, offering a solid balance between performance and simplicity.

However, several limitations were identified:

- A catastrophic forgetting rate of about 10% on basic emotions.

- Specific groups (3, 4, 5, 7) are harder to distinguish, likely due to less discriminative features.

- Significant confusion between certain compound emotions, suggesting better class separation is needed.

These observations lead to promising future work directions:

- Develop more refined methods to separate commonly confused classes, via deeper latent representation analysis.

- Dynamically adapt the feature extractor CNN as new batches are introduced.

- Implement intelligent rehearsal, selecting the most informative examples to retain.

- Experiment with generative models (e.g., GANs, VAEs) for pseudo-rehearsal, to limit memory loss without storing real data.